“When 2 or more instances of the same Power Automate flow are running at the same time, the data manipulation turns into a complete mess. How can I add a delay between the flow runs?”

When you use an automated trigger in a flow, it’ll trigger every time the event happens. In most situations that’s the desired behaviour, even if it means running multiple instances of the flow at the same time. There’s no need to wait for anything, all flow runs can start right away.

But in some situations it’s unwanted behaviour. If the flow works with shared data, or if it uses an external service, parallel runs of multiple instances can lead to problems. Multiple flow runs manipulating the same data at the same time can easily turn it into a complete mess. Calling an external service from multiple flow runs at the same time can lead to rejected requests and flow failures. In such cases it’s better to run only one flow instance at a time.

Run only one flow at a time

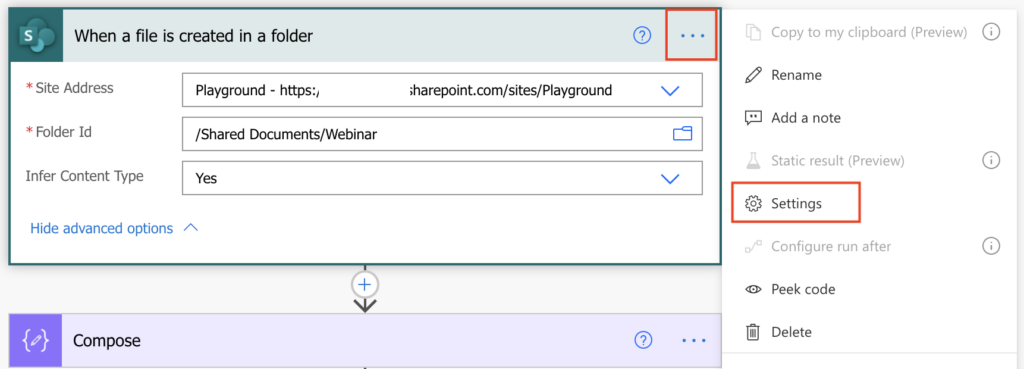

Since we’re talking about triggering a flow, the configuration must be done in the trigger. Go to the trigger ‘Settings’ under the 3 dots.

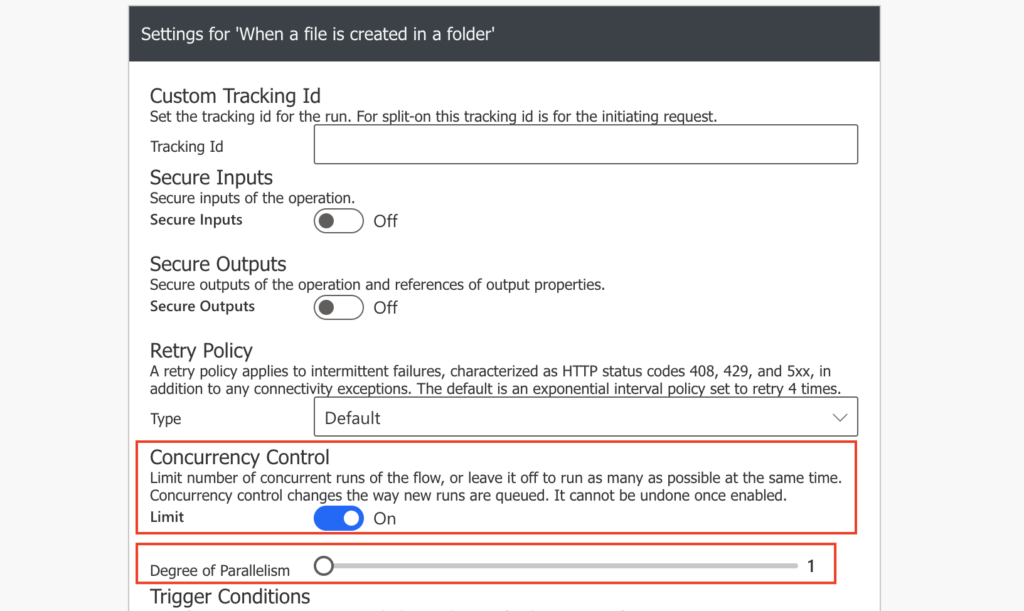

The ‘Concurrency Control’ section allows you to limit the number of flow instances running at the same time. As you want to run only one instance, switch the Limit on and set the degree of parallelism to 1.

With this configuration there’ll always be only 1 flow run in progress. All the other flow runs will wait in the queue until the previous one finishes.

Note: the setting applies only to this specific flow, not to all your flows.
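To make the effect of the limit easier to picture, here is a minimal Python sketch. It is only an analogy, not how Power Automate is implemented: a semaphore stands in for the concurrency limit, threads stand in for flow runs, and the name `simulate_runs` is made up for this illustration.

```python
import threading
import time


def simulate_runs(run_count, concurrency_limit, work_seconds=0.01):
    """Start simulated flow runs and return the highest number active at once."""
    # The semaphore plays the role of the trigger's concurrency limit (assumption).
    gate = threading.Semaphore(concurrency_limit)
    state_lock = threading.Lock()
    state = {"active": 0, "peak": 0}

    def flow_run():
        with gate:  # runs above the limit wait here, like queued flow runs
            with state_lock:
                state["active"] += 1
                state["peak"] = max(state["peak"], state["active"])
            time.sleep(work_seconds)  # stands in for the flow's actions
            with state_lock:
                state["active"] -= 1

    threads = [threading.Thread(target=flow_run) for _ in range(run_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return state["peak"]


peak_with_limit_one = simulate_runs(run_count=5, concurrency_limit=1)
```

With a limit of 1, `peak_with_limit_one` is 1: the five simulated runs all complete, but never more than one at a time.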

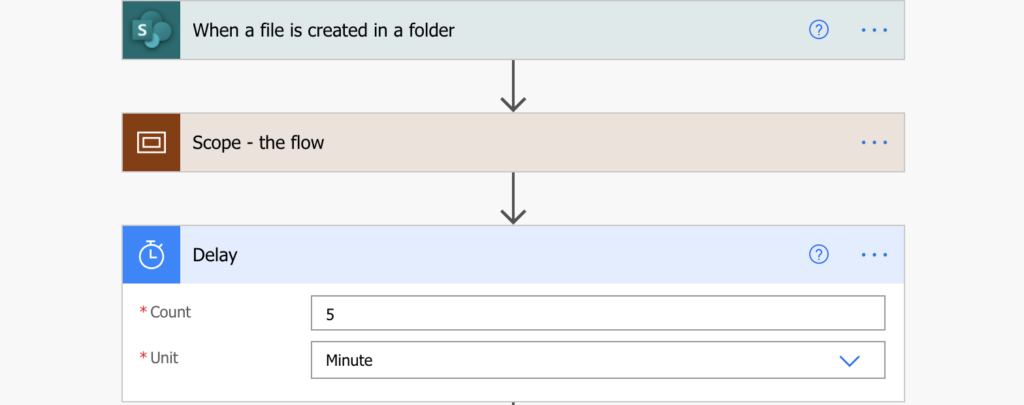

Add a delay between the flow runs

To add a delay between the flow runs, all you need is the ‘Delay’ action at the end of the flow. The concurrency limit makes the flow runs go one by one, and the ‘Delay’ postpones the end of each run. Until the current run ends, which means until it completes the ‘Delay’, no other flow instance will start.
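The timing can be sketched with a small calculation. This is a simplified model with assumptions of my own: every run takes the same `work_seconds`, and waiting runs start in the order they were triggered, which the real queue may not guarantee. The function name `schedule_runs` is hypothetical.

```python
def schedule_runs(trigger_times, work_seconds, delay_seconds):
    """Return (triggered, started, finished) for each run, in seconds."""
    schedule = []
    free_at = 0  # moment the previous run, including its delay, has ended
    for triggered in sorted(trigger_times):  # assumption: first in, first out
        started = max(triggered, free_at)  # wait for the previous run to end
        finished = started + work_seconds + delay_seconds  # delay is the last action
        schedule.append((triggered, started, finished))
        free_at = finished
    return schedule


# Three events arrive one second apart; each run works 5 s and then waits 10 s.
runs = schedule_runs([0, 1, 2], work_seconds=5, delay_seconds=10)
# runs == [(0, 0, 15), (1, 15, 30), (2, 30, 45)]
```

Each run starts only when the previous one has finished its delay, so the actual work of two consecutive runs is always at least `delay_seconds` apart.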

Summary

Adding a delay between multiple Power Automate flow runs takes 2 steps. Firstly, configure the trigger to run only 1 flow instance at a time. Secondly, add the actual delay as the ‘Delay’ action at the end of the flow. You’ll always have at most 1 instance of the flow running, and the next one will start only after the previous one finishes.

Since you’ll be running flows one by one with a delay, you might see a long queue from time to time. If so, I’d add a trigger condition to run the flow only if necessary and reduce the number of runs.

I use the solution above (without the delay) in a SharePoint based task management process. When a task is closed, I need to check whether all the related tasks are already closed too. If it was the last open task, the flow will create the subsequent task(s). I can’t risk that users close the last e.g. 2 tasks at the same time: the flow would trigger twice, both runs would confirm that there’s no other open task, and both would create the subsequent tasks. For that reason there’s always only one task processing flow running, with the others waiting for it to finish.
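The problem in that process is a classic check-then-act race, and a small Python sketch can show it. The details are assumptions for illustration only: the task list is a plain Python list, the subsequent task is added to that same list as an open task, and each simulated run is a generator that pauses between the check and the action.

```python
def flow_run(tasks):
    """One simulated run: check first, act later, pausing in between."""
    all_closed = all(task["closed"] for task in tasks)  # the check
    yield
    if all_closed:  # the action, based on what the check saw earlier
        tasks.append({"name": "follow-up", "closed": False})
    yield


def count_follow_ups(parallel):
    # Two users have just closed the last two tasks, so two runs are triggered.
    tasks = [{"name": "task 1", "closed": True}, {"name": "task 2", "closed": True}]
    first, second = flow_run(tasks), flow_run(tasks)
    if parallel:
        order = [first, second, first, second]  # both check before either acts
    else:
        order = [first, first, second, second]  # second run waits for the first
    for run in order:
        next(run)
    return sum(1 for task in tasks if task["name"] == "follow-up")


duplicates = count_follow_ups(parallel=True)   # 2 follow-up tasks
single = count_follow_ups(parallel=False)      # 1 follow-up task
```

When the runs overlap, both see "everything is closed" and both create the follow-up task. When they run one by one, the second run already sees the open follow-up task and does nothing.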

I am gathering health reports every day from about 500 students using Power Automate. The mail-sending flow works fine every day. The mail contains the URL of a Forms form, and another flow is triggered when a new response is submitted. It seems to work fine, and the responses are written to Excel tables. But some responses were lost because the Forms trigger didn’t fire. There seems to be no trigger queue or anything else to hold the incoming triggers. This is a very severe problem, because every flow run that does start works fine, so no error or warning message is displayed. How can I prevent these lost triggers?

Hello Masaharu,

that’s interesting, aren’t you reaching the daily limit of API calls? I can imagine that each flow has multiple actions, and if you try to run it 500 times it might be blocked due to the high number of API calls. I’d try to contact Microsoft as this sounds more like an infrastructure issue than a flow issue.

I’m trying to use this technique to overcome an infinite loop condition with the Dataverse trigger “When a row is added, modified or deleted”, but I’m still having an issue.

The overall idea is that when a specific “choice” column (Column A) in a Dataverse table is created or changed, copy the label (text value) of that choice to another column (Column B). I can then use the value in Column B in calculated columns. (It’s a limitation that you can’t reference a choice-type column in calculated columns, hence the need to copy the value to another column with Power Automate.)

Because making the change to Column B is itself another modification to the table, that would create an infinite loop with Column B updating over and over. To fix this, I have a trigger condition that only allows the trigger to fire if Column A doesn’t equal Column B.

That’s all great, and it works, except it takes several seconds for the change to Column B to actually update in Dataverse. In the meantime, the flow runs a couple more times to keep updating Column B… which is obviously not needed or desired. Eventually Column B and Column A (the choice column) are equal, and the trigger doesn’t fire anymore.

As your article suggests, I changed the concurrency control to “1”, and added a 20 second delay at the end. This helped, but now the flow runs exactly twice. While the 20 second delay is happening, one more flow run will “queue up” in a “waiting” status. It seems that the trigger condition gets processed (and finds that column A and B are not equal) BEFORE determining that the flow needs to go into the “waiting” queue due to concurrency restriction.

Any thoughts on how to avoid the 2nd flow run?

I think I found the answer. By also setting the “Select columns” parameter in the trigger to Column A, the 2nd flow run can’t happen because the update wasn’t to Column A… it was to Column B. So, it requires a 4-tier approach to making this work:

– Select columns: Column A

– Trigger filter: Column A doesn’t equal column B

– Concurrency of 1

– Delay at the end of the flow to give Dataverse time to update Column B
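Why the first two items work together can be sketched in Python. This is only a model of the behaviour described above, not Dataverse or Power Automate code: `should_trigger`, the column keys and the sample values are all made up, and the "stale row" reflects the guess that the trigger condition is evaluated before the new Column B value is visible.

```python
def should_trigger(changed_columns, row, select_columns=None):
    """Return True if a simulated row-modified event would start the flow."""
    # 'Select columns': ignore events that did not touch any listed column.
    if select_columns is not None and not (set(changed_columns) & set(select_columns)):
        return False
    # Trigger condition: only fire while the two columns differ.
    return row["column_a_label"] != row["column_b"]


# The flow has just written Column B, but the trigger still sees the old value.
stale_row = {"column_a_label": "Approved", "column_b": "Draft"}
event_from_flow = ["column_b"]

fires_without_select = should_trigger(event_from_flow, stale_row)
fires_with_select = should_trigger(
    event_from_flow, stale_row, select_columns=["column_a_label"]
)
user_edit_fires = should_trigger(
    ["column_a_label"], stale_row, select_columns=["column_a_label"]
)
```

Without the column filter the flow's own update passes the condition (`fires_without_select` is `True`). With the filter it is ignored (`fires_with_select` is `False`), while a real change to Column A still starts the flow (`user_edit_fires` is `True`).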

Hello Kore,

good work finding the solution. I’d just make sure that the synchronisation of the columns is the first action in the flow, to avoid the risk of other updates in the meantime.